I have been feeling nostalgic lately. Several things going on in my life have me reflecting on the past and thinking about the future, especially as it relates to my life experiences with STEM: Science, Technology, Engineering (or Entrepreneurship), and Mathematics. This is going to be one of my longer articles, but hopefully it will be fun as I stroll down memory lane. Let’s start with some personal history for context.

I was born in 1967, which places me at the front end of Generation X, normally defined as those born between 1965 and 1980. Some of you reading this article may think I’m old, and others may think I’m still young. I guess that means I’m somewhere in between. I don’t mind being middle-aged, with all that comes with it.

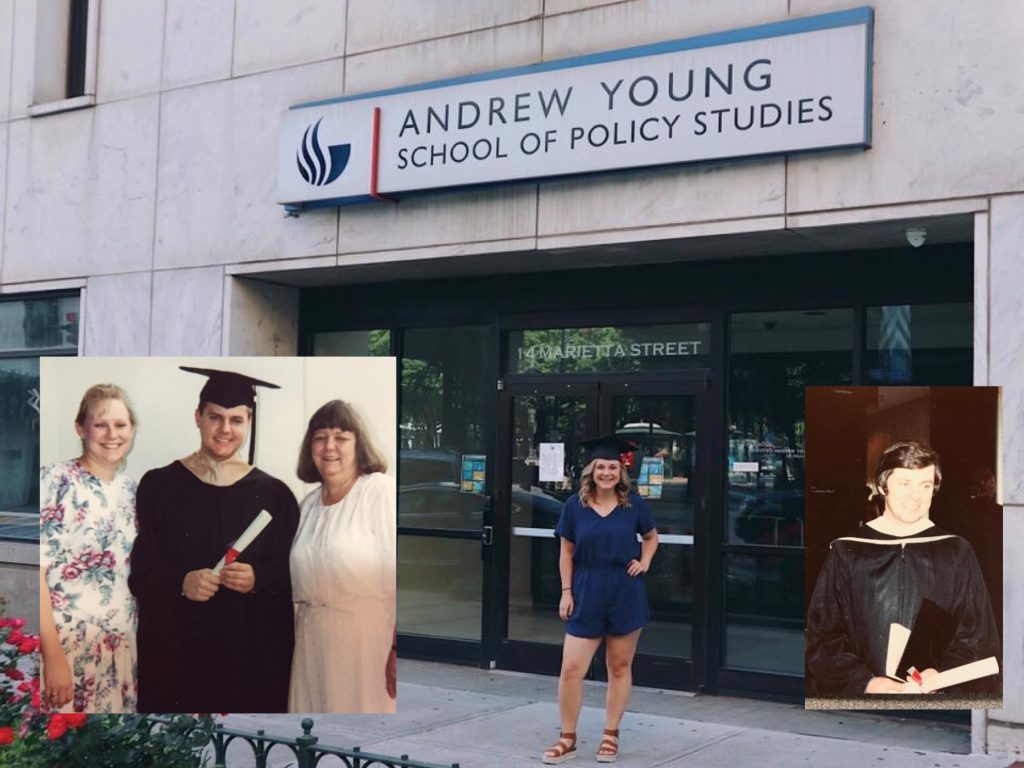

My parents are young; they had me when they were 20. After my father’s service in Vietnam, he started several businesses, and that’s probably where I got my own entrepreneurial spirit. He was the first person on his side of the family to finish college, and he even earned a Master’s Degree from Georgia State University (GSU) in 1979. We now have three generations of GSU scholars in our family. My Master’s Degree is from GSU (MBA ’93), and my daughter Alex earned her Master’s Degree at GSU a few years ago. My younger brother Chris also has his doctoral degree from GSU.

Two things happened after my father completed his post-graduate degree in 1979 that influenced my path in technology. First, Dad accepted a position as a college instructor at DeKalb Community College in Clarkston. It’s still there but is now known as the Clarkston Campus of Perimeter College – GSU (the connection to GSU again). We moved to the area and settled in Stone Mountain, where I attended Clarkston High School (Class of 1985). Like many kids, I wanted to be like my dad, the “cool instructor,” and I thought being a college instructor was about the coolest job there was. The importance and prominence he gave to education made a big impact on me.

The second thing that happened around that same time was my introduction to computer technology. Dad taught business data processing, and I was fascinated, not only by the technology itself (computers and handheld calculators) but also by how it could be applied to solve business problems. He initially used mainframe computers. In fact, I remember going to the computer lab with him to process computer “punch cards” to run programs. That didn’t last long, however, because the personal computer arrived and changed everything. At the college, he used a TRS-80 and an Apple II (my first introduction to Apple). Eventually, he saved enough money to buy a computer for the house: the Osborne 1 “suitcase” computer. I still have it, and it works!

First released in April 1981, the Osborne 1 was the first commercially viable portable computer. It ran the CP/M operating system, a forerunner of Microsoft’s Disk Operating System (MS-DOS). Having that computer at home gave me the opportunity to learn, experiment, and innovate with computer technology firsthand. I feel strongly that kids should have access to resources to be creative and learn on their own. Having a family that emphasized the importance of learning and provided access to the tools I needed made all the difference in the world to me.

Now that you know a little about my background, please indulge me as I continue my stroll down memory lane by considering several topics of personal interest in STEM that I have enjoyed and experienced over the years.

Application Software

The Osborne 1 came bundled with one of the very first “office suites” of software, including the WordStar word processor, SuperCalc spreadsheet software, and the dBASE II database system. Today’s Google Workspace and Microsoft Office follow in the footsteps of those early programs. Business users were amazed at the power of those early applications, but I was just a teenager at the time, so I wanted to “tinker.” The Osborne 1 also included CBASIC, which gave me the option to explore BASIC programming.

Computer Programming

BASIC was my first computer programming language. I used it on the Osborne 1 at home, on the Apple II in my father’s computer lab at the college, and at Clarkston High School. Under the mentorship of Coach Paul Horsley, Clarkston started an innovative new class to give high school kids like me access to computer programming.

I was amazed at all the things I could tell the computer to do using BASIC. To this day, I consider computer programming my original computer science interest. Once I mastered BASIC, I taught myself 8080 assembly language, Pascal, and COBOL.

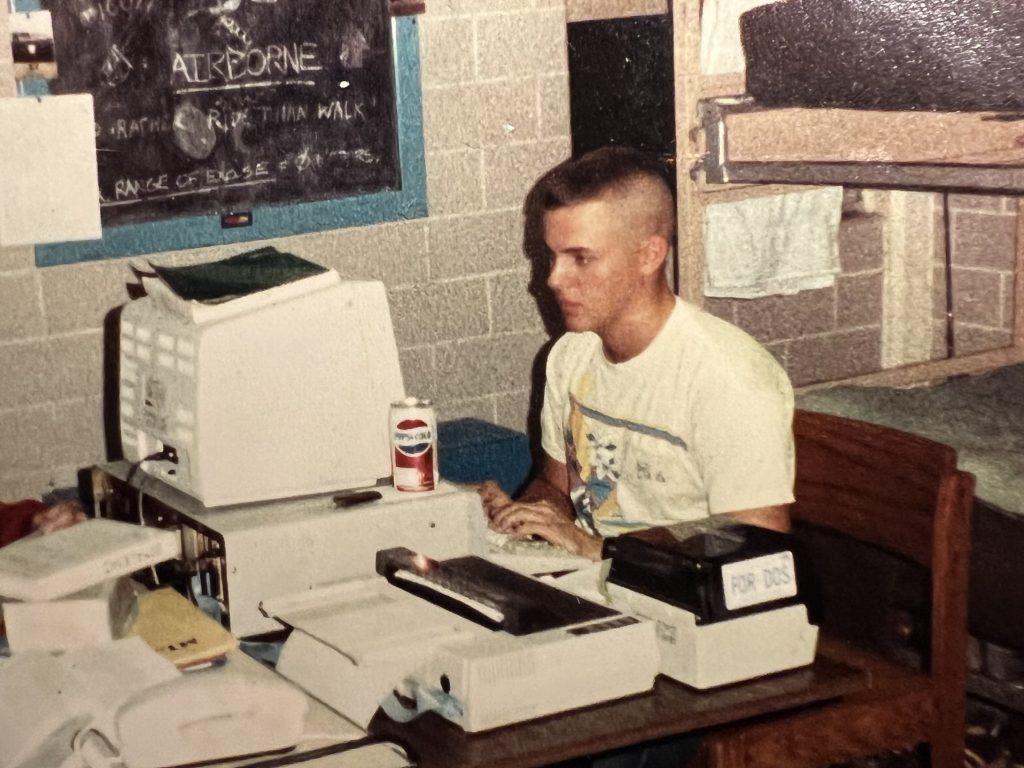

Upon graduating from high school, I enrolled at North Georgia College (now the University of North Georgia) in Dahlonega. The Computer Science program was part of the Department of Mathematics, so my studies included both fields. Many of the computer classes were taught by the late Mr. Ernie Elder, who nurtured our sense of exploration as we studied algorithms, computer architecture, and programming languages that seemed esoteric at the time, like PROLOG, APL, and C. Dr. Kathy Sisk was also a very positive influence on my studies in math and computer science. Thank you, Ernie and Kathy. I was on an ROTC scholarship at North Georgia and was one of the few cadets with a personal computer in the barracks.

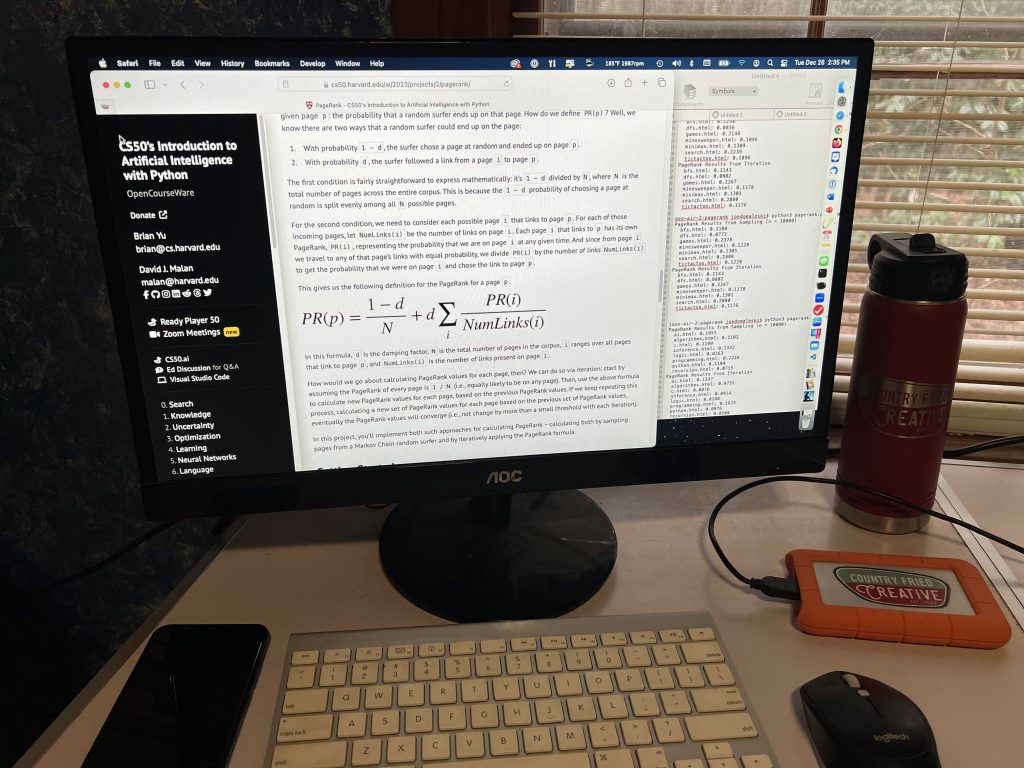

Computer programming has always been a sort of “home base” skill for me, and those skills were further developed later in life when I learned HTML, CSS, PHP, JavaScript, and most recently, Python.

Mathematics

Computer science has its origins in math. I think that students who have a solid foundation in math will find the rigor of computer science easier. One of the earliest influences on my mathematical studies was Sister Bernadine, a Catholic nun who taught an 8th-grade accelerated math class at Our Lady of the Assumption Catholic School, where I attended grades 1-8. To this day, she remains one of the best math teachers I ever had. We learned pre-calculus in 8th grade, and she made it fun. Having that early exposure to advanced math in 1980 helped me understand the mathematical underpinnings of modern computer algorithms.

In high school, I continued to nurture my interest in math but also became interested in science as a form of applied math. Trigonometry and calculus made more sense because of physics, and vice versa.

My mathematical journey went much deeper in college because of the curriculum associated with the computer science degree. I was given the opportunity to explore a wide variety of topics such as statistics, probability, linear algebra, discrete math, calculus, and numerical analysis. Linear algebra, in particular, did not seem very relevant at the time but might be one of the most important topics, as it forms the basis for the neural networks that power generative artificial intelligence (AI). Knowing how matrices and vectors work gave me an advantage when considering the strengths and weaknesses of different AI approaches. Math matters.

Most of my math in graduate school was based on financial analysis using interest rate calculations and net present value. We did use statistics in analysis (data summaries), calculus in economics (maximum/minimum), and linear regression for decision sciences (which I’ll come back to later).
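Net present value is simple arithmetic once it is written out. Each future cash flow is divided by (1 + r)^t to express it in today’s dollars, and the results are summed. The Python sketch below assumes year-end cash flows and a constant discount rate. The function name and the project numbers are hypothetical, for illustration only.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value: the sum of CF_t / (1 + rate) ** t, with t = 0 for the first cash flow."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical project: invest $1,000 today, then receive $400 at the end of each of the next three years.
flows = [-1000.0, 400.0, 400.0, 400.0]
print(f"NPV at 8%: ${npv(0.08, flows):,.2f}")
```

A positive result means the project earns more than the 8% discount rate. At 10%, the same cash flows come out slightly negative, which is why the choice of rate matters so much in these analyses.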

Science

You can’t talk about math without talking about science and vice versa. Ever since middle school, I’ve enjoyed science and participating in science fairs. Electronics, physics, and astronomy were always my favorites. Physics, in particular, really interested me, and it ended up being my minor at North Georgia. During my studies, a book came out that really inspired me: “Chaos: Making a New Science” by James Gleick. This book, released in 1987, introduced the world to chaos theory and the work of the Santa Fe Institute.

Simply stated, chaos theory (the study of complex non-linear dynamical systems) is an interdisciplinary field that brings together math and physics to study systems that appear to be random (or chaotic) but are not. Attributes of chaotic systems include sensitivity to initial conditions, self-similarity, determinism, feedback loops, fractals, and self-organization. Examples include weather, flocks of birds, the spread of fire, economies, viral behavior on the internet, and so forth. Perhaps you’ve heard of the “Butterfly Effect,” the idea that a change as tiny as a butterfly flapping its wings can lead to very different weather down the road?

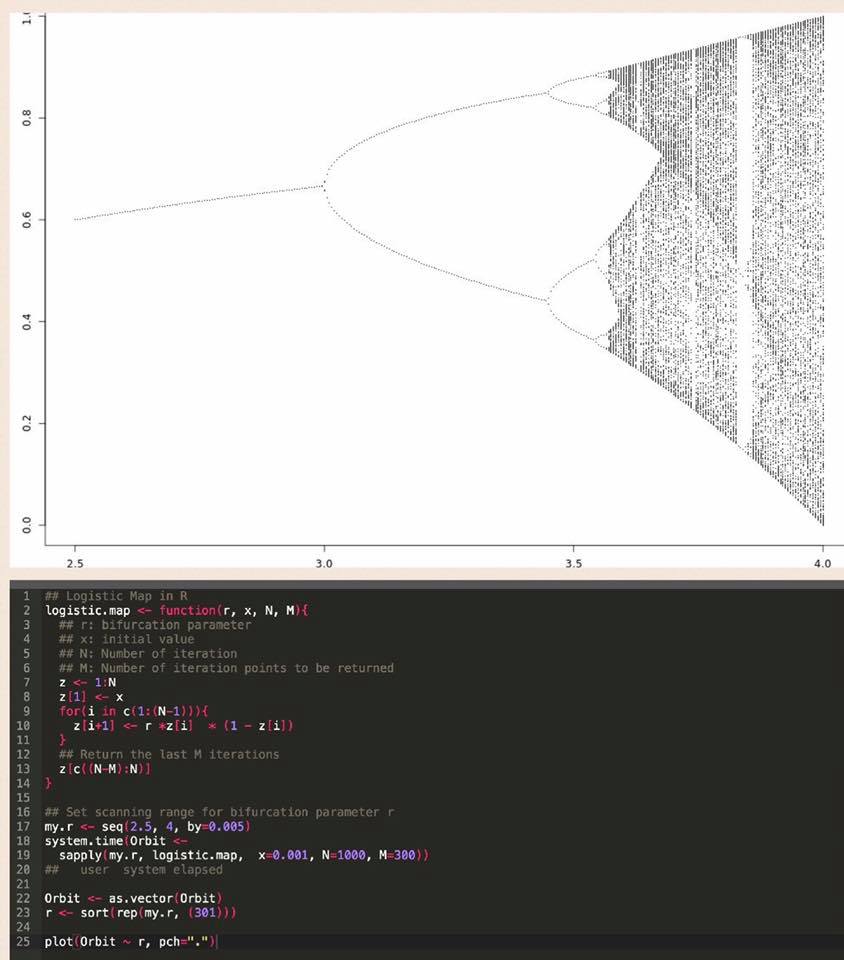

By 2015, the Santa Fe Institute’s Complexity Explorer program was offering online courses in a variety of complexity topics. The classes weren’t easy. They required statistics, calculus, and specialized programming languages like NetLogo for modeling agent-based systems. I had a blast. The screenshot above shows one of my R programs plotting the famous “logistic map.” Fun stuff, to me at least.
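For the curious, here is a minimal Python sketch of the same idea. It is a stand-in, not the R program in the screenshot. The logistic map iterates x_{n+1} = r · x_n · (1 − x_n). The code runs it from two starting points that differ by one part in a billion, using r = 4.0 (a value in the chaotic regime), to show sensitivity to initial conditions. The function name and parameter choices are illustrative assumptions.

```python
def logistic_map(r: float, x0: float, steps: int) -> list[float]:
    """Iterate x_{n+1} = r * x_n * (1 - x_n) and return the whole trajectory."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


r = 4.0  # fully chaotic regime; try r = 2.8 to see it settle onto a stable fixed point
a = logistic_map(r, 0.2, 50)
b = logistic_map(r, 0.2 + 1e-9, 50)  # nudge the starting value by one billionth

for n in (0, 10, 20, 30, 40, 50):
    print(f"step {n:2d}: {a[n]:.6f} vs {b[n]:.6f}  (gap {abs(a[n] - b[n]):.2e})")
```

At r = 4.0 the gap roughly doubles each step on average. A one-in-a-billion difference therefore grows to order one within a few dozen iterations: the “Butterfly Effect” in miniature.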

Personal Computers

Ever since Dad brought home the Osborne 1 in 1982, I’ve had access to a personal computer. Over the years, my computers have gotten smaller as their processing power has increased. When personal computers arrived, putting the power of mainframe computers on everyone’s desktop was quite a revolution. Throughout the ’80s and early ’90s, many people in Information Technology opposed giving users that much power. Issues of data access, security, standardization, and support still create struggles for the modern enterprise. Echoes of that battle can still be heard in debates over using portable devices like smartphones and tablets at work and in the classroom. Although it’s not without issues, I do believe having access to personal computing devices is a good thing, even though I like to unplug once in a while too.

Expert Systems

After learning how to program computers in the early ’80s, I began to wonder about ways to make computers even more helpful. I was first exposed to the term “Artificial Intelligence” in a mid-’80s article in the now-defunct BYTE computer magazine. The article talked about ways to codify human knowledge into so-called expert systems to solve problems. A special declarative programming language called PROLOG (Programming in Logic) was well suited to just that. Developers would code rules and facts in PROLOG, and the computer would infer solutions. It worked a lot like logic puzzles or the board game Clue: you lay out all the facts and try to determine “who done it.”
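Real PROLOG works with variables and unification, which are beyond a short example. The basic idea of facts, rules, and inference can still be sketched in Python. The toy below is propositional only, with no variables. It answers a query by backward chaining, working from the goal back toward known facts, which is the general strategy PROLOG uses. The Clue-style fact and rule names are hypothetical.

```python
# Facts: things we simply know to be true (hypothetical Clue-style clues).
FACTS = {"plum_was_in_library", "plum_has_no_alibi", "candlestick_in_library"}

# Rules: a conclusion holds if ALL goals in any one of its bodies can be proven.
RULES: dict[str, list[list[str]]] = {
    "plum_is_suspect": [["plum_was_in_library", "plum_has_no_alibi"]],
    "weapon_is_candlestick": [["candlestick_in_library"]],
    "plum_did_it": [["plum_is_suspect", "weapon_is_candlestick"]],
}


def prove(goal: str, seen: frozenset[str] = frozenset()) -> bool:
    """Backward chaining: try to prove a goal from facts, or via a rule whose sub-goals all hold."""
    if goal in FACTS:
        return True
    if goal in seen:  # guard against circular rules
        return False
    for body in RULES.get(goal, []):
        if all(prove(sub, seen | {goal}) for sub in body):
            return True
    return False


print(prove("plum_did_it"))
print(prove("mustard_did_it"))
```

Even this toy shows the catch described below: every conclusion the system can reach must first be written down by hand as a rule.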

I learned about PROLOG at North Georgia in an Introduction to Programming Languages survey class and continued experimenting with it using a commercial version called Turbo Prolog. Somewhere in my house is a copy of Turbo Prolog on a floppy disk. Although it was fun for solving simple problems, it soon became apparent that coding all the rules was very labor-intensive. For an expert system to help solve a problem, knowledge engineers had to code everything known about that problem as rules. Interest in expert systems began to die down in the ’90s, although marketers continued to use the term as a buzzword all the way up to 1999, when the so-called “millennium bug” became the focus of attention.

Neural Networks

I had never heard of a neural network until I watched Star Trek: The Next Generation on TV. In that show, the android “Data” had a positronic brain powered by a neural network. Knowing that Star Trek liked to maintain a basis in science, I looked up the term and discovered that it was a real thing. An early form, the “perceptron,” was conceived in 1957 by Dr. Frank Rosenblatt as a way to pattern a computer architecture after the neurons in the human brain.
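The perceptron’s learning rule is short enough to sketch. The Python below is a minimal, illustrative version trained on a toy problem: the logical AND function. Whenever a prediction is wrong, each weight is nudged by learning_rate × error × input. The data, learning rate, and epoch count are assumptions chosen for the example.

```python
def predict(weights: list[float], bias: float, x: list[float]) -> int:
    """Fire (1) if the weighted sum of the inputs plus the bias is above zero, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(data, epochs: int = 20, lr: float = 1.0):
    """Perceptron rule: after each example, adjust every weight by lr * error * input."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND: output 1 only when both inputs are 1.
AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])
```

A single perceptron can only separate classes with a straight line (more generally, a hyperplane), so it cannot learn XOR. Minsky and Papert highlighted that limitation in 1969, and it is often cited as one reason interest in neural networks cooled.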

It’s beyond the scope of this article to recount the history of neural networks, but there’s plenty of information available online, including a summary on Wikipedia. Neural networks fell out of favor during the “AI winter” and didn’t really re-emerge until the late ’80s, as the limits of expert systems were becoming apparent. A key innovation, backpropagation, used calculus (specifically the chain rule) to let multi-layer neural networks “learn” by repeatedly adjusting their weights to reduce their errors.
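Backpropagation is essentially the chain rule applied layer by layer. You compute the network’s output, measure the error, then work backward to find how much each weight contributed to that error. The pure-Python sketch below uses a hypothetical toy network (two inputs, two tanh hidden units, one linear output), made-up weights, and squared-error loss. None of these choices come from the text above. The code checks one backpropagated gradient against a finite-difference estimate, then takes a single gradient-descent step.

```python
import math

# Toy network: 2 inputs -> 2 tanh hidden units -> 1 linear output.
# params = (W1, b1, w2, b2), where W1[j][i] connects input i to hidden unit j.


def forward(params, x):
    W1, b1, w2, b2 = params
    h = [math.tanh(sum(W1[j][i] * x[i] for i in range(2)) + b1[j]) for j in range(2)]
    y = sum(w2[j] * h[j] for j in range(2)) + b2
    return h, y


def loss(params, x, t):
    """Squared error, halved so that its derivative with respect to y is simply (y - t)."""
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2


def backprop(params, x, t):
    """Apply the chain rule from the output layer back toward the inputs."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - t                                             # dL/dy
    dw2 = [dy * h[j] for j in range(2)]                    # dL/dw2[j]
    db2 = dy                                               # dL/db2
    dz = [dy * w2[j] * (1 - h[j] ** 2) for j in range(2)]  # tanh'(z) = 1 - tanh(z)**2
    dW1 = [[dz[j] * x[i] for i in range(2)] for j in range(2)]
    db1 = list(dz)
    return dW1, db1, dw2, db2


# Arbitrary starting weights and a single training example.
params = ([[0.5, -0.3], [0.2, 0.8]], [0.1, -0.1], [0.7, -0.4], 0.05)
x, t = [1.0, 2.0], 1.0
dW1, db1, dw2, db2 = backprop(params, x, t)

# Sanity check one gradient against a central finite-difference estimate.
eps = 1e-6
W1_up = [row[:] for row in params[0]]
W1_up[0][1] += eps
W1_down = [row[:] for row in params[0]]
W1_down[0][1] -= eps
numeric = (loss((W1_up, *params[1:]), x, t) - loss((W1_down, *params[1:]), x, t)) / (2 * eps)
print(f"backprop: {dW1[0][1]:.6f}   finite difference: {numeric:.6f}")

# One gradient-descent step: move every parameter a little against its gradient.
lr = 0.1
W1, b1, w2, b2 = params
new_params = (
    [[W1[j][i] - lr * dW1[j][i] for i in range(2)] for j in range(2)],
    [b1[j] - lr * db1[j] for j in range(2)],
    [w2[j] - lr * dw2[j] for j in range(2)],
    b2 - lr * db2,
)
print(f"loss before: {loss(params, x, t):.4f}   after one step: {loss(new_params, x, t):.4f}")
```

Training a real network repeats that last step over many examples and many passes. Modern libraries automate the gradient bookkeeping, but the underlying calculus is the same.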

Neural networks popped back up on my radar screen in graduate school. As part of my MBA curriculum, I had to take a Decision Sciences class taught by a young professor named Dr. Alok Srivastava. These days, decision science goes by names like forecasting, analytics, and data science. Whatever you call it, it’s really the application of mathematical tools to help make business decisions. Before “big data,” we did much of that analysis in spreadsheets, in our case Lotus 1-2-3 on a personal computer. After class one day, I mentioned to Alok that I knew C programming and thought some of our spreadsheet assignments would be better handled by a computer program. He pulled me aside and asked, “What do you know about backpropagation neural networks?”

We collaborated on some financial and economic data analysis using neural networks written in C. Because textbooks still didn’t have much to say on the subject, one of our early references was a new book, “Neural Networks in C++: An Object-Oriented Framework for Building Connectionist Systems” by Adam Blum (Wiley, 1992). I still have a physical copy somewhere on my bookshelf. What we learned then, and what is still true today, is that neural networks are excellent at pattern recognition. Built on linear algebra, statistics, and partial derivatives (calculus), neural networks seem able to “self-organize” given proper training, much like a human. Alok encouraged me to continue my studies and pursue a Ph.D., but I opted to enter the business world with my MBA, get married, and start a family. Sadly, Alok passed away in 2010 due to complications from diabetes.

Honestly, I had forgotten much of my early work in neural networks because of the rise of the Internet in the late ’90s. Much AI research stalled again in another “AI winter” as everyone became fascinated with the internet and web-based technologies. In fact, the term “AI” itself drew some ridicule as a promise that had gone unfulfilled since the ’50s. Little did we know that neural networks would resurface to power modern AI, including generative AI such as ChatGPT.

Internet

There’s no doubt that the Internet has had a major impact on my life and on the lives of just about everyone. My first email account was a GSU account back in the early ’90s, when most internet usage was restricted to government and academic use. Outside of school, we used dial-up modems and computer bulletin boards (a subject for another article). Before the World Wide Web (now simply “the web”), I used something called Gopher (“go fer”) to retrieve documents and information on the internet from the GSU computer lab. Gopher was a text-only, menu-based system. It fell out of favor when HTTP, HTML, and the web spawned the creation of websites.
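Part of Gopher’s charm was its simplicity. Under RFC 1436, a client opens a TCP connection (usually to port 70), sends a selector string followed by CRLF, and gets back either a document or a menu. Each menu line is a one-character item type followed by tab-separated fields (display text, selector, host, port), and a line containing only a period ends the menu. The parser below is a minimal sketch of that menu format. It skips the network part entirely, and the sample menu and host name are hypothetical.

```python
from typing import NamedTuple


class MenuItem(NamedTuple):
    item_type: str  # '0' = text file, '1' = submenu (per RFC 1436)
    display: str    # what the user sees in the menu
    selector: str   # string the client sends back to fetch this item
    host: str
    port: int


def parse_gopher_menu(raw: str) -> list[MenuItem]:
    """Parse CRLF-separated menu lines with tab-separated fields; a lone '.' ends the menu."""
    items = []
    for line in raw.split("\r\n"):
        if line == ".":  # end-of-menu marker
            break
        if not line:
            continue
        item_type, rest = line[0], line[1:]
        display, selector, host, port = rest.split("\t")[:4]  # assumes well-formed lines
        items.append(MenuItem(item_type, display, selector, host, int(port)))
    return items


# Hypothetical menu, as a campus Gopher server might have returned it.
sample = (
    "1Campus Directory\t/directory\tgopher.example.edu\t70\r\n"
    "0Library Hours\t/library/hours.txt\tgopher.example.edu\t70\r\n"
    ".\r\n"
)
for item in parse_gopher_menu(sample):
    kind = "menu" if item.item_type == "1" else "text"
    print(f"[{kind}] {item.display} -> {item.selector}")
```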

Being a book and information nerd, I was fascinated by having the ability to sit at a computer and look stuff up using Gopher and then web browsers. To this day, I still love to go down “rabbit holes” by looking up esoteric facts and information online. This newspaper website, where you are reading my article, was one of the first commercially viable websites in Fayette County and the first local newspaper to go online.

We should probably make a distinction between several related terms. The Internet is a network that connects computers all over the world. The web is a system of websites (residing on computer servers) containing web pages to view information and handle transactions. The web is accessible through the internet. The internet is also used to send/receive electronic mail (email) and pass data between applications on your computer, phone, tablet, and other devices.

Web Projects

During much of the ’90s, I served in various consulting roles related to the business application of technology. Beginning with the commercialization of the Internet and the Atlanta Olympic Games in 1996, I decided to steer my career in the direction of web project management. The Olympic website was built on IBM technologies. As one of the first IBM-certified Internet Architects, I became an expert in developing large-scale e-commerce websites in the late ’90s and especially into the early 2000s.

I feel strongly that a project manager should be able to “do the work” and not just direct it from afar. Not everyone agrees with that philosophy. Maybe it comes from my military experience, where everyone carries a weapon. Regardless, I taught myself HTML (the language of the web) and TCP/IP (the core protocols of the Internet) so that I could be a better web project manager. To this day, I can code websites by hand using a simple text editor. I don’t do it often, and my staff prefers it that way!

In fact, I enjoyed building websites so much that in March 2003, I left the corporate world to start my entrepreneurial journey by building websites.

Entrepreneurship

You can read more about why I started my business in my very first article. As I’ve said many times before, running a business has been both the hardest thing I’ve ever done and one of the most rewarding. Nothing I learned in business school or as a consultant fully prepared me for the ups and downs of running a business.

Now in our 21st year, I’m still learning things about running a business as I go along. Besides the points I made in two previous articles, on the things I most like about running a business and the things I don’t like, two other insights have become apparent.

First, most people are not cut out to be entrepreneurs. Those who are must be driven by passion, not greed. There are much easier ways to make money than starting a business. This is going to ruffle some feathers, but if your primary goal is to make money, do something else. If you have a dream and think you can make money chasing it, go for it. You will learn so much and help so many people along the way, but be prepared for disappointment and rejection.

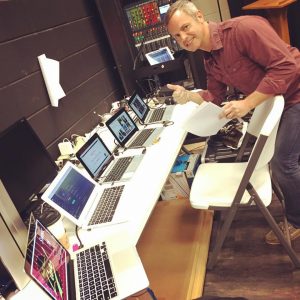

Second, my business would not have succeeded without a solid basis in technology. It’s true that the heart of our business is people: employees, clients, and supporters. However, our interactions wouldn’t work without great technology and the knowledge to use it. We use technology for literally everything we do to create outstanding marketing programs and campaigns for clients.

In short, I can trace quite a bit of my own entrepreneurial passion and drive back to tinkering with computer programming as a teenager. I had no idea at the time that the Internet and the web would even come to pass, and now I own a company whose work depends on them. Lesson learned: we don’t know what the future holds or where life will take us. Some of the things we do now will become obsolete, and things that haven’t been invented yet could become our livelihood. Let’s return to something from my early career that has resurfaced: AI.

AI and Neural Networks Revisited

Artificial Intelligence became fashionable again last year, in 2023, with the sudden appearance of generative AI such as ChatGPT. What’s old is new again. Ironically, the Internet that helped push neural networks out of the spotlight in the ’90s also helped fuel their resurgence around 2010.

The convergence of “big data” from the internet and faster processors in the form of GPUs (graphics processing units) sparked renewed interest in artificially intelligent applications. AI, often powered by neural networks, started showing up under the radar in recommendation engines, mapping, image recognition, and data analysis. As I mentioned in a previous article, these types of AI were marketed as “smart” or “intelligent” systems because of the lingering stigma attached to the term “AI.”

All of that changed last year, and now AI is back in the spotlight. I think we’re all suffering from AI overload. The truth is, no one knows where it’s heading. Will AI take over the world and make humans obsolete, or will it plateau as the innovation curve flattens and training data runs short?

As for me, I’m excited. Because of my life experiences, I’ve seen situations like this before, when a disruptive technology seemingly shows up out of nowhere. I wasn’t alive during the industrial revolution, but I have spent most of my life as an active participant in the information revolution. When personal computers arrived around 1980, it took about a decade, but things changed dramatically. Entire industries were created just as others died out. When the Internet and the web came along, they created enormous economic opportunity but also killed off other things, like printed phone books (which used to be a billion-dollar business). Smartphones have combined the power of computers and the Internet to put a lot of power in everyone’s hands. Yes, the emergence of generative AI is huge, maybe one of the biggest things ever to face humanity, or maybe not. We don’t know.

The Future

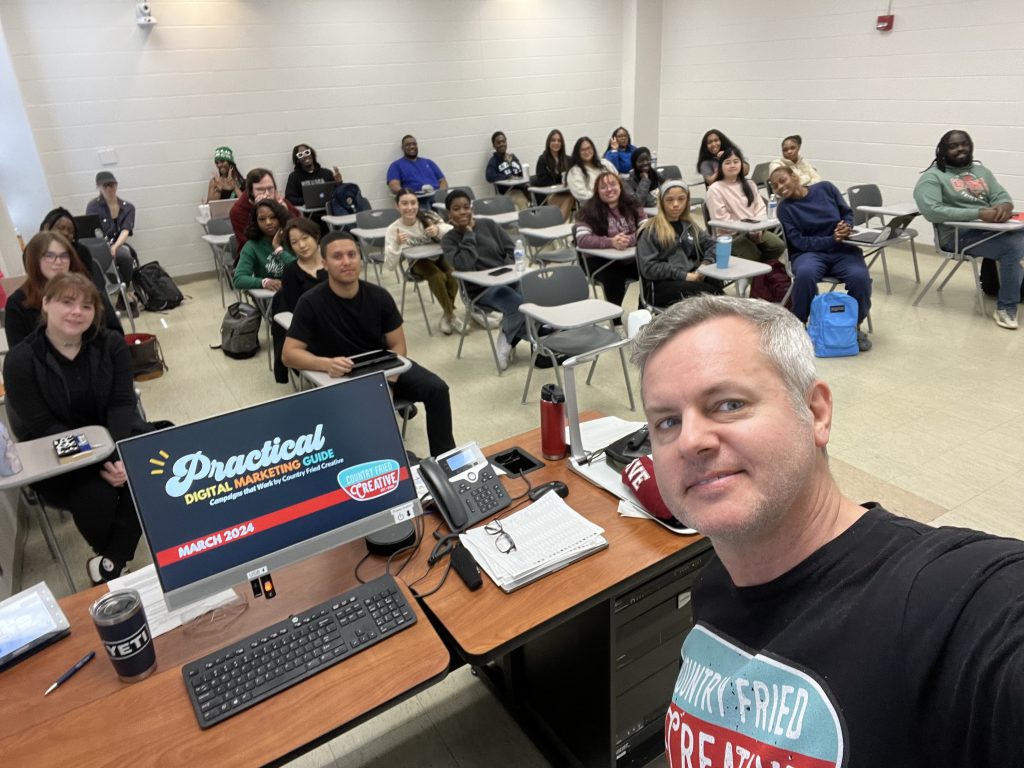

Recently, I had the opportunity to interview a young data scientist for a possible internship position with my company. He will soon graduate with a Master’s Degree in data science and is exploring options. As we plan for expanding our range of digital marketing services into new areas, it’s important to bring in talent. During our discussions, I was very impressed by his enthusiasm for AI and data science. I told him that he reminded me of myself 30 years ago. He was surprised to find out that an old guy like me knew how to implement deep learning (a form of neural network AI) in Python. He also didn’t know that neural networks had been around for so long and that someone in the business community actually understood and used linear algebra and partial derivatives for marketing analysis. There’s no question that his skills are more current than mine, but it’s important for old guys like me to keep up with the young ones.

As for me, I’m glad that my career had a firm foundation in STEM: Science, Technology, Engineering (and Entrepreneurship), and Math. A solid STEM foundation can prepare you for life in ways you can’t imagine. Even if AI can do a lot of the work for you, a solid foundation of knowledge in a human brain is essential to make sure the right questions are asked of AI and the computer-generated solutions are properly interpreted. For now, at least, AI can’t read our minds, so we have to be able to guide it. STEM can help you do that.

Thank you to everyone who was a mentor or teacher to me over the years. Thanks also to all of the interns, colleagues, and current and former employees who gave me the opportunity to share my experiences with you. How are you preparing yourself and those around you for the future?

[Joe Domaleski, a Fayette County resident for 25+ years, is the owner of Country Fried Creative – an award-winning digital marketing agency located in Peachtree City. His company was the Fayette Chamber’s 2021 Small Business of the Year. Joe is a husband, father of three grown children, and proud Army veteran. He has an MBA from Georgia State University and enjoys sharing his perspectives drawing from thirty years of business leadership experience. Sign up for the Country Fried Creative newsletter to get marketing and business articles directly in your inbox. You can connect with Joe directly on LinkedIn for more insights and updates.]
